Dynamic desirability: Launching Gravity, SN13’s democratization of data source selection

Our latest tool drives greater directability, giving validators and users more power to shape the competition.

Fresh, high-quality data is the new gold. From journalism and market analysis to trading signals and model training data, it has a huge variety of potential uses. Subnet 13 (SN13) has demonstrated its capabilities for data scraping at scale – it scrapes an estimated 15 million social media posts per hour, or around 350 million per day. In just a few months, it has produced the largest open-source social media dataset in the world, which continues to grow.

This is made possible by a key feature of SN13’s design: data desirability. The data desirability mechanism rewards miners for scraping data that matches certain labels, such as posts that contain specific Twitter hashtags or come from specific subreddits. For instance, if a large desirability value is assigned to a hashtag such as #bittensor, the SN13 miners will be highly rewarded for scraping posts that contain that hashtag. The inevitable result is that the network will preferentially scrape data of that type, leading to rapid procurement of a large dataset matching the qualities labelled as desirable.

Historically, the data desirabilities have been defined (statically) by Macrocosmos in the SN13 codebase. This centrally mandated design for designating data desirability was chosen to ensure agreement across all the validators upon the value of different data, which is essential for the Bittensor consensus mechanism. Yet this limits desirability to reflecting only the preferences of the subnet owner. Our vision for the future of SN13 is to enable validators to ‘drive’ the subnet by voting on which data sources and labels are most desirable: dynamic desirability.

That’s why we’re excited to announce the MVP launch of Gravity, our directable SN13 service. Built upon the rock-solid SN13 incentive mechanism and driven by dynamic desirability, Gravity gives validators an opportunity to generate revenue from their bandwidth by allowing users to set desirabilities on their behalf. Validators use their stake to denote what data they are interested in. The more you stake, the more of a say you get in how our datasets are shaped. By changing a few lines of code, custom internet-scale datasets can be assembled almost overnight.

How dynamic desirability works

Dynamic Desirability has three key facets:

Democratic: The desirability of all data sources is determined by stake-weighted fractional voting amongst validators

Dynamic: The desirability is updated in real time, so that validators can direct SN13 miners with minimal latency

Transparent: Everyone has real-time access to the shared global state of the network

The use of stake-weighted fractional voting is a natural choice as it effectively provides validators with bandwidth proportional to their delegated stake. Moreover, fractional voting allows validators to spread their votes across many labels via normalized weights. This introduces an interesting trade-off for validators as they can opt to apply their bandwidth either narrowly or broadly. In the narrow scenario, validators use their entire vote on a single data label (e.g. weight=1 on #bittensor). Conversely, in the broad scenario validators spread their vote very thinly across many preferences. This may be useful when validators want more coverage over a given topic (such as the upcoming US elections). The voting strategies that are employed by validators will likely be unique to their specific use-cases.
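The narrow and broad strategies can be illustrated with a minimal sketch. The function name `normalize_votes` and the example labels are hypothetical, chosen here for illustration; this is not the SN13 implementation.

```python
def normalize_votes(raw_votes: dict[str, float]) -> dict[str, float]:
    """Scale non-negative raw votes so that the weights sum to 1."""
    if any(weight < 0 for weight in raw_votes.values()):
        raise ValueError("vote weights must be non-negative")
    total = sum(raw_votes.values())
    if total <= 0:
        raise ValueError("at least one vote must be positive")
    return {label: weight / total for label, weight in raw_votes.items()}


# Narrow strategy: the entire vote goes to a single label.
narrow = normalize_votes({"#bittensor": 1.0})

# Broad strategy: the same vote spread evenly across 20 labels
# (label names are made up for illustration).
broad = normalize_votes({f"#election_topic_{i}": 1.0 for i in range(20)})

print(narrow)
print(max(broad.values()))
```

In the broad case each label receives only a twentieth of the validator's vote, which is the trade-off described above: more coverage, less pull per label.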

There is also the question of how to combine the weighted votes. Rather than forcing conformity between validators using a mechanism such as Yuma Consensus, we embrace the open-ended system design and define the total desirability of each label as the weighted sum of votes across all validators. This means that the total set of desirable labels will be the union of all validator labels. Miner efforts will most likely focus on the labels with the highest weights (provided that these sources are not exhausted).
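A minimal sketch of this aggregation is shown below. It assumes that each validator's votes are already normalized and that the stake weighting is the validator's share of total stake; the validator names, stake values and the function `aggregate_desirability` are hypothetical.

```python
from collections import defaultdict


def aggregate_desirability(
    stakes: dict[str, float],
    votes: dict[str, dict[str, float]],
) -> dict[str, float]:
    """Stake-weighted sum of validator votes for every label."""
    total_stake = sum(stakes.values())
    totals: defaultdict[str, float] = defaultdict(float)
    for validator, label_weights in votes.items():
        stake_share = stakes[validator] / total_stake  # assumed weighting
        for label, weight in label_weights.items():
            totals[label] += stake_share * weight
    return dict(totals)


# Illustrative numbers only.
stakes = {"validator_a": 600.0, "validator_b": 300.0, "validator_c": 100.0}
votes = {
    "validator_a": {"#bittensor": 1.0},
    "validator_b": {"#bittensor": 0.5, "r/MachineLearning": 0.5},
    "validator_c": {"#ai": 1.0},
}

desirability = aggregate_desirability(stakes, votes)
for label, value in sorted(desirability.items(), key=lambda item: -item[1]):
    print(f"{label}: {value:.2f}")
```

Every label that any validator voted for appears in the result, so the output is the union of all validator labels, with the most heavily backed label ranked first.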

The total data desirability, which reflects the preferences of all validators, is given by the following equation:
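As a sketch of this weighted sum, in notation introduced here for illustration (the symbols and the normalization by total stake are assumptions, not taken from the SN13 codebase):

```latex
D_{\ell} \;=\; \sum_{v \in V} \frac{s_v}{\sum_{u \in V} s_u}\, w_{v,\ell},
\qquad
w_{v,\ell} \ge 0,
\qquad
\sum_{\ell} w_{v,\ell} = 1
```

Here $V$ is the set of validators, $s_v$ is the stake of validator $v$, and $w_{v,\ell}$ is the normalized weight that validator $v$ assigns to label $\ell$, taken to be zero when $v$ has not voted for $\ell$.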

A robust method of collecting and aggregating validator preferences for different data labels necessitates the creation and sharing of the global state. With a global set of data desirabilities, miners know what data to scrape and validators can agree on how to reward it. This provides a persistent and public way to direct the miners' efforts while maintaining a fair competition. Importantly, the desirabilities must be modifiable by validators in real time so that all network participants can adapt to an ever-changing reward landscape driven by real-world demand.

The naïve approach to creating such a global state is simply to embed the validator preferences directly into the codebase by requiring them to make pull requests or similar. However, this is a cumbersome solution as it requires constant manual intervention, is error-prone and is unsafe. Most importantly, this does not adhere to the decentralized principles of Macrocosmos.

Alternatively, we could make use of organic scoring within each validator so that they can update their desirability indices independently of each other. The primary drawback to this approach is that it is not consensus-preserving, as there is no shared global state for validators. Consequently, each validator would set weights on miners inconsistently, which would damage validator trust and divide the miners' efforts ineffectively.

Instead, we should enable validators to broadcast their preferences to the entire network in an automated and regular fashion. One way to do this is to use a shared database to create an asynchronous, persistent and transparent record of the voting history. Then, everyone in the network can easily reconstruct the full desirability index by looking for recent changes to the distributed system state.

Commit Hashes on Bittensor

Validators commit their preferences to the Bittensor chain, where others can view them

Bittensor supports the use of small on-chain payloads called commit hashes. These hashes are used in Macrocosmos’ subnets 9 and 37 by miners to notify validators of their model improvements, and have been very successful. This approach is effective because participants can securely update their state in an asynchronous fashion, and the shared global state can be accessed by anyone by reading the chain and combining the individual states.

To reduce unnecessary chain bloat, commit hashes are both rate-limited and size-limited. We investigated commit hashes as a potential way to produce a transparent global state for Dynamic Desirability. The rate limit is one commit per 100 blocks (approximately 20 minutes), which sets the maximum frequency at which validators can update their choices. We believe that this is suitable for our data scraping use case, as the realistic timescale over which miners will scrape the required data volumes is hours to days. However, commit hashes are restricted to messages of no more than 128 bytes. We estimate that this would limit validators to voting on around 7 labels at most, which is very few. While commit hashes are not ideal for storing the validator preferences themselves, they remain a suitable way for validators to signal to the network that they have updated their preferences.
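The arithmetic behind both limits can be checked with a short sketch. It assumes a block time of roughly 12 seconds and a compact JSON encoding of the votes; the encoding and the label names are hypothetical, and the number of labels that fits depends on how long the labels are and how they are encoded.

```python
import json

BLOCK_TIME_SECONDS = 12   # assumed typical block time
RATE_LIMIT_BLOCKS = 100   # rate limit quoted above
MAX_PAYLOAD_BYTES = 128   # size limit quoted above

minutes_between_updates = RATE_LIMIT_BLOCKS * BLOCK_TIME_SECONDS / 60


def encode_votes(votes: dict[str, float]) -> bytes:
    """Compact JSON encoding (a hypothetical on-chain format)."""
    return json.dumps(votes, separators=(",", ":")).encode("utf-8")


def max_labels_that_fit(labels: list[str], weight: float = 0.1) -> int:
    """Count how many leading labels fit within the payload limit."""
    votes: dict[str, float] = {}
    for label in labels:
        candidate = {**votes, label: weight}
        if len(encode_votes(candidate)) > MAX_PAYLOAD_BYTES:
            break
        votes = candidate
    return len(votes)


example_labels = [f"#example_label_{i}" for i in range(20)]
print(f"Minimum time between updates: {minutes_between_updates:.0f} minutes")
print(f"Labels that fit in one payload: {max_labels_that_fit(example_labels)}")
```

Whatever the exact encoding, only a handful of labels fit in a single payload, which is why the preferences themselves need to live somewhere else.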

Shared Public Repository

Validators commit their preferences to a GitHub repository, which anyone can read from

We can enable the validators to specify their preferences by storing them within a public GitHub repository. Within the repository, each validator has their own JSON file which contains their preferences.

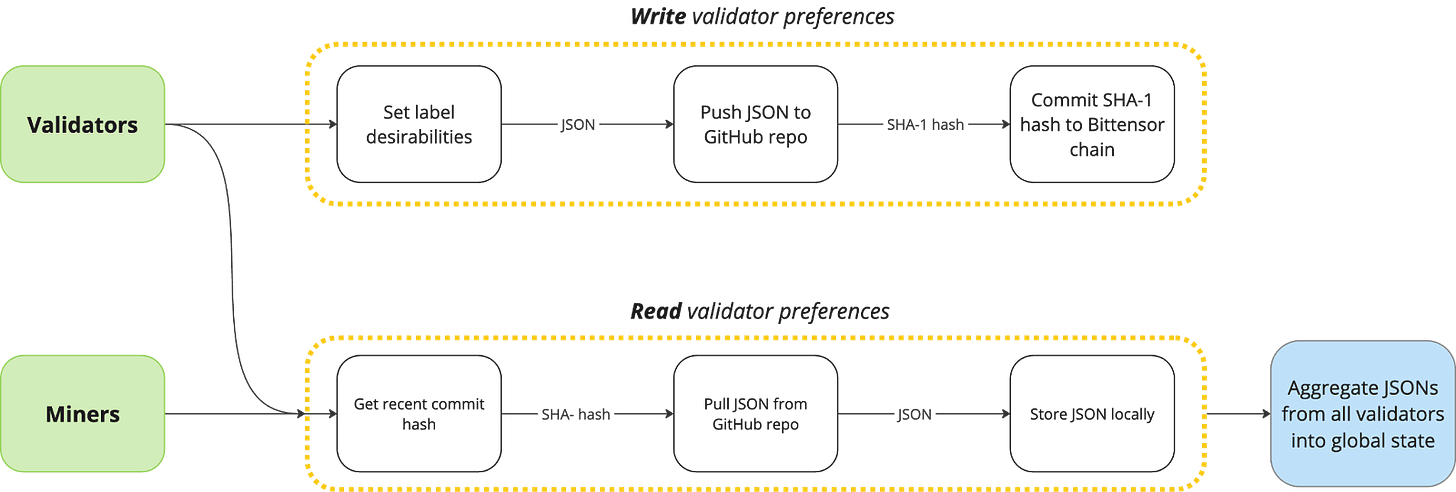

Validators update their preferences by:

1. Modifying their local JSON file with their preferences

2. Committing the JSON file to GitHub and recording the SHA-1 hash of their commit

3. Publishing their SHA-1 hash to the Bittensor chain

This avoids the size limitations of commit hashes and enables real-time updates. We choose to use commit hashes in addition to GitHub version control because this solves the problem of authenticating the author of the GitHub commits. We note that this is preferable to Macrocosmos owning the API service, as it is decentralized and open source.
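The three write steps can be sketched as follows. The Git and chain interactions are passed in as callables because their concrete APIs are not described here; `commit_file`, `publish_on_chain` and the JSON layout are hypothetical stand-ins rather than the Gravity implementation.

```python
import hashlib
import json
from typing import Callable

MAX_COMMITMENT_BYTES = 128  # on-chain size limit quoted above


def serialize_preferences(preferences: dict[str, float]) -> str:
    """Step 1: validate the votes and render the JSON file content."""
    weights = preferences.values()
    if any(w < 0 for w in weights) or sum(weights) > 1 + 1e-9:
        raise ValueError("weights must be non-negative and sum to at most 1")
    return json.dumps(preferences, indent=2, sort_keys=True)


def update_preferences(
    preferences: dict[str, float],
    commit_file: Callable[[str], str],           # hypothetical: returns commit SHA-1
    publish_on_chain: Callable[[bytes], None],   # hypothetical: writes the commitment
) -> str:
    content = serialize_preferences(preferences)
    commit_sha = commit_file(content)            # step 2: commit to the repository
    if len(commit_sha) != 40:
        raise ValueError("expected a 40-character hex SHA-1")
    payload = commit_sha.encode("ascii")
    if len(payload) > MAX_COMMITMENT_BYTES:
        raise ValueError("commitment exceeds the on-chain size limit")
    publish_on_chain(payload)                    # step 3: publish the hash
    return commit_sha


# Stand-ins for demonstration only. Git derives a commit hash from the
# commit object, not from the file content alone as this fake does.
def fake_commit_file(content: str) -> str:
    return hashlib.sha1(content.encode("utf-8")).hexdigest()


published: list[bytes] = []
sha = update_preferences(
    {"#bittensor": 0.7, "r/bittensor": 0.3}, fake_commit_file, published.append
)
print(sha, len(published[0]))
```

A hex-encoded SHA-1 is 40 characters long, so it fits comfortably within the 128-byte limit regardless of how many labels the JSON file contains.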

Anyone can then construct the global state by carrying out the following steps:

1. Query Bittensor chain for most recent validator commit hashes

2. Extract the SHA-1 hash from the commit hashes and use it to locate the current validator JSON file

3. Download the JSON file

4. Apply the aggregation formula to the JSON file for all validators

Note that steps 1-3 must be carried out for all validators to get their current preferences. Steps 2 and 3 can be skipped for validators that have not updated their preferences since the last time the check was performed. Diagram 1 shows both the read and write operations for Dynamic Desirability.

Diagram 1: Validators upload their data desirabilities as a JSON file to GitHub and publish their SHA-1 hash
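The read path can be sketched in the same way. The chain query and the file download are injected as callables, since their concrete APIs are not described here; `read_commit_sha`, `fetch_preferences` and the stake-share weighting are assumptions made for illustration.

```python
from typing import Callable, Optional

Preferences = dict[str, float]


def build_global_state(
    stakes: dict[str, float],
    read_commit_sha: Callable[[str], Optional[str]],        # hypothetical chain query
    fetch_preferences: Callable[[str, str], Preferences],   # hypothetical download
    cache: dict[str, tuple[str, Preferences]],
) -> dict[str, float]:
    """Rebuild the desirability index from on-chain hashes and JSON files."""
    total_stake = sum(stakes.values())
    desirability: dict[str, float] = {}
    for validator, stake in stakes.items():
        sha = read_commit_sha(validator)          # query the chain
        if sha is None:
            continue                              # validator has not voted
        cached = cache.get(validator)
        if cached is None or cached[0] != sha:    # download only if changed
            cache[validator] = (sha, fetch_preferences(validator, sha))
        preferences = cache[validator][1]
        for label, weight in preferences.items():  # aggregate
            share = stake / total_stake
            desirability[label] = desirability.get(label, 0.0) + share * weight
    return desirability


# In-memory stand-ins for the chain and the repository.
chain = {"validator_a": "a" * 40, "validator_b": "b" * 40}
repo = {
    ("validator_a", "a" * 40): {"#bittensor": 1.0},
    ("validator_b", "b" * 40): {"#bittensor": 0.5, "r/MachineLearning": 0.5},
}
downloads: list[str] = []


def fake_fetch(validator: str, sha: str) -> Preferences:
    downloads.append(validator)
    return repo[(validator, sha)]


stakes = {"validator_a": 750.0, "validator_b": 250.0}
cache: dict[str, tuple[str, Preferences]] = {}
state = build_global_state(stakes, chain.get, fake_fetch, cache)
state_again = build_global_state(stakes, chain.get, fake_fetch, cache)
print(state, len(downloads))
```

The second call reuses the cached files because no hash has changed, which mirrors the note above that the download can be skipped for validators whose preferences are unchanged.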

With this substantial change to the subnet’s design, the means by which validators can shape miner behavior and earn revenue have expanded. Dynamic Desirability exemplifies SN13’s promotion of flexibility, openness, and competitiveness: three driving factors behind Bittensor’s success.

We’ll publish more about the processes behind Gravity, its intentions, and how users can interact with the frontend. Subscribe to this newsletter to receive the next instalment.