Chain-of-thought: SN1 enhanced with multi-step reasoning

Multi-step chain-of-thought reasoning can deliver impressive performance improvements, which is why we’re now launching these capabilities on SN1.

There's renewed excitement around LLMs. DeepSeek R1's success has drawn attention to open-source approaches, and especially to how these approaches achieve such impressive results.

While there are many factors at play, the key element appears to be multi-step reasoning, also known as Chain of Thought (CoT), in which a model can ‘think through’ its answer before returning a final result. This is one of several exciting techniques that use more test-time compute, giving models more time to reason before they respond. Not only does it produce better results, it also lets you peek behind the curtain and see how the LLM reached its conclusion.

What makes CoT particularly powerful is that the LLM can catch and correct mistakes as it generates its thinking steps, and can explore different approaches before presenting a response.

Subnet 1, Apex, has been experimenting with this for some time and will release this update to the public on February 20th. It is the first time any form of test-time compute has been implemented within Bittensor.

Multi-step and chain-of-thought reasoning on Bittensor

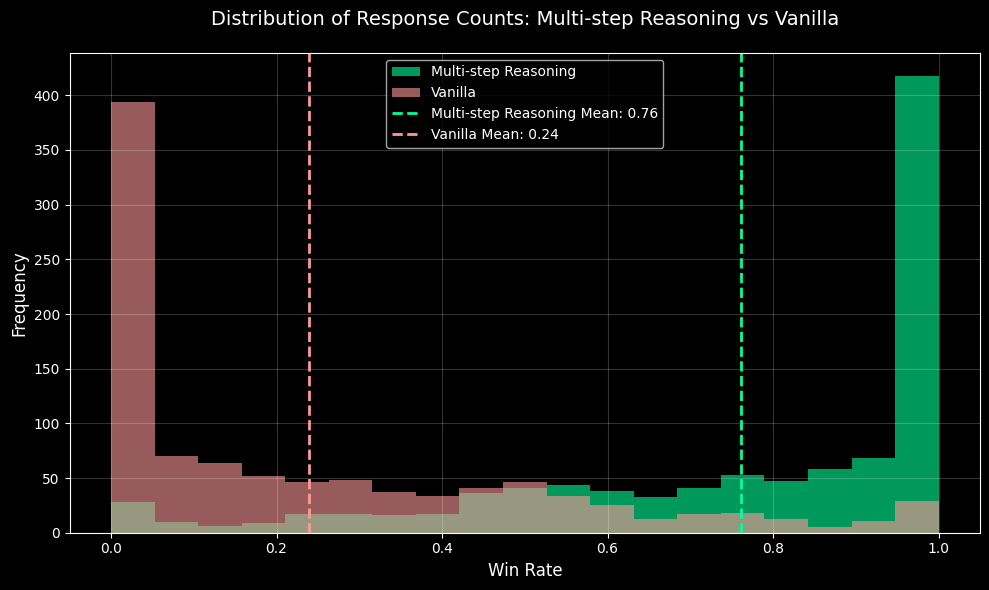

Incentivizing test-time compute is a fascinating challenge, especially in an ecosystem as dynamic as Bittensor. SN1 validators use CoT to generate a top-quality reference answer. Miners are then sent the same challenge and must respond within a much tighter time limit, which prevents them from running the same reasoning steps. The challenge for miners is to match the quality of these CoT-enhanced responses in a fraction of the time, creating a competition to produce high-quality outputs as quickly as possible.

By scoring miners on how similar their answers are to the validator’s answer (which uses reasoning steps and is therefore more time-intensive and expensive to compute), SN1 incentivizes miners to build workflows that outperform off-the-shelf models in a fraction of the time.
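The scoring idea above can be illustrated with a minimal sketch. The post doesn’t describe SN1’s actual reward function, so everything here is an assumption: answers are compared as embedding vectors using cosine similarity, and a response that arrives after a hypothetical time budget earns nothing. `MinerResponse`, `score_miner` and `time_budget_s` are illustrative names, not part of SN1’s code.

```python
import math
from dataclasses import dataclass


@dataclass
class MinerResponse:
    # Hypothetical container: an embedding of the miner's answer text
    # and how long the miner took to respond, in seconds.
    embedding: list[float]
    elapsed_s: float


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def score_miner(reference_embedding: list[float],
                response: MinerResponse,
                time_budget_s: float) -> float:
    """Hypothetical reward: similarity to the validator's CoT reference,
    zeroed if the miner exceeds the (much shorter) time budget."""
    if response.elapsed_s > time_budget_s:
        return 0.0
    # Clamp negative similarities to zero so rewards stay in [0, 1].
    return max(0.0, cosine_similarity(reference_embedding, response.embedding))
```

For example, a response whose embedding points the same way as the reference and arrives within budget scores 1.0, while the same response arriving late scores 0.0. Zeroing late answers is just one simple way to encode the time constraint; a real reward could instead decay gradually with latency.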

The magic lies in forcing miners to push their models to catch up with (and potentially match) the validators while being given less time. If they want to win, they must get creative: without enough time to produce any reasoning steps, merely matching a validator’s response is almost impossible, even with the same LLM. We call this task Advanced Reasoning, because miners must replicate the results of validators’ multi-step reasoning without adding extra steps, which improves their models’ single-step capabilities.

This adversarial environment pushes miners to innovate and, eventually, to match validators in performance. When that happens, validators can simply adopt the models miners are using, creating a cascading effect that pushes miners to outperform once again. It’s an iterative process that should lead to consistently stronger LLMs on SN1, all without requiring any new innovation on the validator side.

Chain of Thought in the product

Along with Advanced Reasoning, we’re also adding classic CoT functionality to SN1’s soon-to-be-released chat application. It works similarly to OpenAI’s o1, explaining its reasoning steps as it thinks so that users can follow the LLM’s logic.

Refining our inference tasks

Multi-step reasoning is changing how SN1’s inference tasks work. It starts with miners running the models we expect them to run, producing reference answers whose thinking steps can be reused. We force CoT and give them a time limit for an answer, then take their thinking steps and turn each one into a distinct inference task that can be sent to other miners.
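One way to picture turning a reasoning trace into new tasks is to treat each thinking step as a target: given the question and the steps before it, a miner must produce the next step. This is a minimal sketch under that assumption only. The post doesn’t specify how SN1 formats these tasks, and `InferenceTask` and `steps_to_tasks` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class InferenceTask:
    # Hypothetical task format: the original question plus the reasoning
    # steps produced so far; the reference is the step that came next.
    prompt: str
    reference: str


def steps_to_tasks(question: str, thinking_steps: list[str]) -> list[InferenceTask]:
    """Turn one chain-of-thought trace into several smaller inference tasks.

    Task i asks a miner to continue the reasoning after steps 0..i-1,
    and the recorded step i serves as that task's reference answer.
    """
    tasks = []
    for i, step in enumerate(thinking_steps):
        context = "\n".join(thinking_steps[:i])
        prompt = question if not context else f"{question}\n{context}"
        tasks.append(InferenceTask(prompt=prompt, reference=step))
    return tasks
```

A three-step trace therefore yields three tasks: the first is just the question, and the last includes the first two steps as context, with the third step as its reference.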

Because each miner answer is layered with thinking steps, we only need to spot-check a handful of them. Those steps can then be used to check how well each miner is innovating with their own models, which effectively means the miners check their own answers.
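Spot-checking can be sketched as random sampling: rather than verifying every answer, a validator checks a small random subset and uses the pass rate as an estimate for the whole batch. The `verify` callback and the sample size `k` are hypothetical placeholders. The post doesn’t say how SN1 checks an individual answer or how many answers it samples.

```python
import random
from typing import Callable, Optional, Sequence, TypeVar

T = TypeVar("T")


def spot_check(answers: Sequence[T], k: int,
               verify: Callable[[T], bool],
               rng: Optional[random.Random] = None) -> float:
    """Verify a random sample of at most k answers instead of all of them.

    Returns the fraction of sampled answers that pass the hypothetical
    `verify` check (0.0 if there is nothing to sample).
    """
    if rng is None:
        rng = random.Random()
    # Sample without replacement; fall back to every answer if k is too large.
    sample = rng.sample(list(answers), min(k, len(answers)))
    if not sample:
        return 0.0
    passed = sum(1 for answer in sample if verify(answer))
    return passed / len(sample)
```

Random selection matters here: if miners could predict which answers would be checked, they could put effort only into those.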

The upshot? Less resource-intensive work for validators and consistently better responses from miners, making SN1 a more enticing environment for people to join.

How to use the newly updated SN1

You’ll be able to play with this updated version of SN1 on February 20th. It’ll feature not only multi-step reasoning and CoT, but also the ability to see what the subnet is ‘thinking’ about as it produces your result.

While some subnets already run LLMs with CoT built in, this is the first time that CoT and multi-step reasoning have been incentivized directly within the Bittensor network, where validators and miners are active participants in the process.

Together with the ability for miners to surpass validators and the use of multi-step reasoning in inference tasks, this dramatically reshapes SN1’s landscape, propelling Apex and Bittensor to the forefront of the latest LLM breakthroughs.

To discuss these updates with our community, visit our Discord and Telegram.