AlphaFold 3 is a huge leap forward - but for researchers, restrictions remain

DeepMind's latest protein-folding model is impressive, yet access is still constrained by the cost of compute. Distributed alternatives can deliver comparable models more efficiently.

by Dr. Steffen Cruz

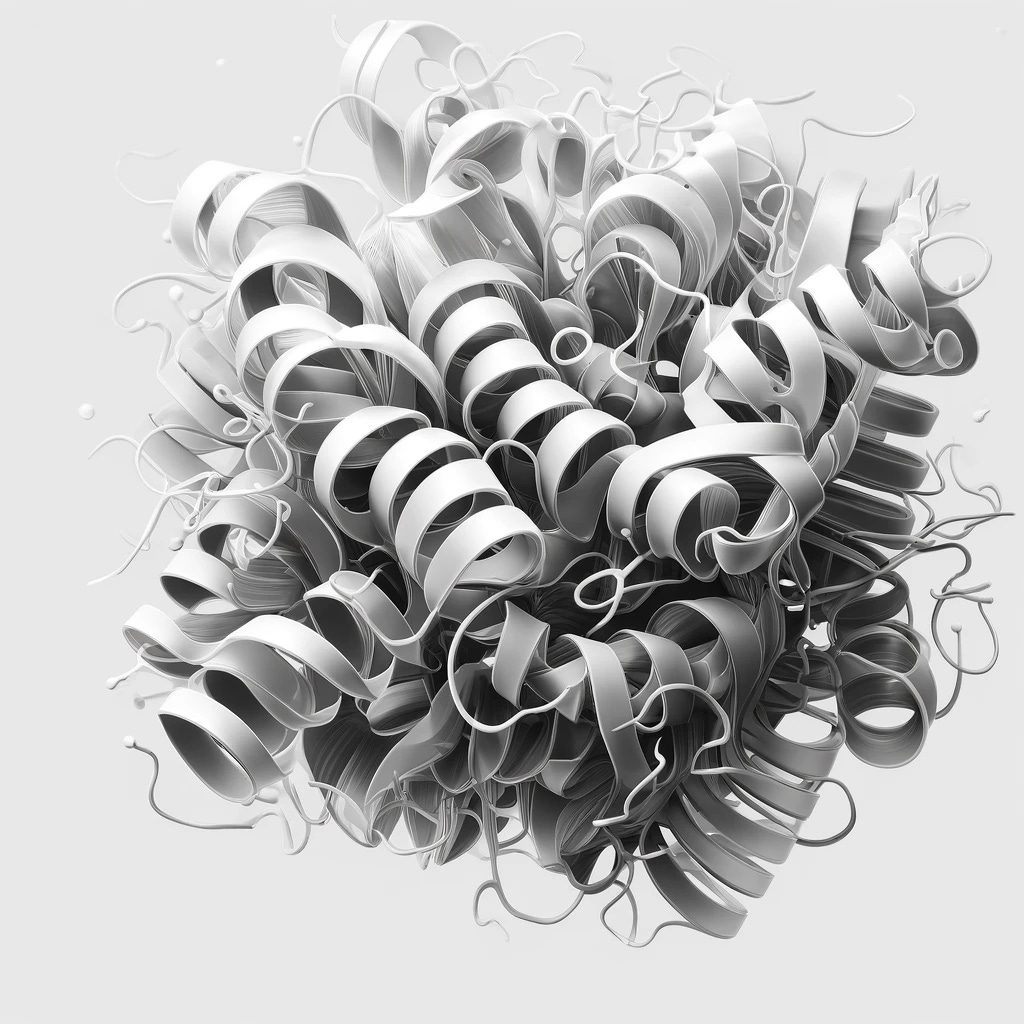

Of all the academic feats accomplished so far by machine learning models, perhaps the most impressive is protein-folding.

Long considered one of the most intractable challenges in biology - and a gateway into the future of biotech - protein-folding was chosen by DeepMind precisely because it was notoriously difficult to solve.

AlphaFold (2018) and AlphaFold2 (2020) achieved more than all previous efforts combined. Last week, DeepMind released AlphaFold3 in collaboration with Isomorphic Labs.

Building on the foundations laid by earlier models, AlphaFold3 predicts the structure and interactions of proteins with other molecules. ‘For some important categories of interaction we have doubled prediction accuracy,’ the announcement post claimed.

The latest iteration is well worth celebrating. Having developed a Subnet devoted to the incentivisation of protein-folding machine learning models, we appreciate just how complex this field really is.

Yet significant limitations remain - many of which stem not from the model itself, but from how it is distributed and accessed. Users are limited to 5,000 tokens per job. Each standard amino-acid residue, nucleotide base, and ion counts as one token, and each ligand consumes one token per heavy atom. This effectively caps the size of the complexes that can be studied, and researchers working on large assemblies may find their investigations hampered.
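
To make the token budget concrete, here is a rough Python sketch. The function names and the example complex are hypothetical. The sketch assumes a simplified counting rule (one token per standard residue, nucleotide, or ion; one token per heavy atom of each ligand). AlphaFold3's actual tokenizer has further special cases, such as modified residues, so treat the result as an estimate only.

```python
# Hypothetical helper for estimating the tokens an AlphaFold3-style job uses.
# Simplified assumption: one token per standard residue, nucleotide, or ion,
# and one token per heavy atom for each ligand.
TOKEN_LIMIT = 5_000  # per-job cap quoted in the article


def estimate_tokens(protein_lengths, nucleotide_lengths=(), ion_count=0,
                    ligand_heavy_atoms=()):
    """Return an estimated token count for a molecular complex."""
    return (
        sum(protein_lengths)        # one token per amino-acid residue
        + sum(nucleotide_lengths)   # one token per DNA/RNA base
        + ion_count                 # one token per ion
        + sum(ligand_heavy_atoms)   # one token per ligand heavy atom
    )


def fits_limit(tokens, limit=TOKEN_LIMIT):
    """True if the estimated job size is within the per-job cap."""
    return tokens <= limit


# Hypothetical homotetramer of 1,300-residue chains, two zinc ions,
# and one ligand with 30 heavy atoms.
tokens = estimate_tokens([1300] * 4, ion_count=2, ligand_heavy_atoms=[30])
print(tokens, fits_limit(tokens))  # prints "5232 False"
```

Under these assumptions, four chains of 1,300 residues already exceed the cap, while the same complex with 1,200-residue chains (4,832 tokens) would fit.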

Users also face a second restriction: a limited number of jobs per day, which at present appears to be 10. Each job samples five predictions per seed, but together these limits constrain the pace at which research can progress.

These aren’t oversights by the creators, but reflections of the enormous compute costs that centralized models incur. AlphaFold3’s technical prowess is beyond doubt, but distributing access economically is a separate challenge. Providing a state-of-the-art protein-folding model at the scale, speed, and affordability that academics and start-ups need is hard when centralized compute is so expensive.

Macrocosmos’ protein-folding system is, admittedly, far more embryonic than AlphaFold3. However, our protocol’s open-source, decentralized network of distributed compute can provide cutting-edge machine learning models - including for protein-folding - without incurring the costs of centralized, proprietary technologies.

Launched in the week of May 13, 2024, Subnet 25 offers researchers a protein-folding model on different terms. Rather than 10 jobs per day, the only limit is the number of miners, which, thanks to our reward incentives, should scale with demand. As in any functioning free market, more jobs should attract more workers, allowing capacity to keep pace with demand.

Similarly, while AlphaFold3 samples five predictions per seed, Subnet 25 is limited only by the miner batch size, which already stands at 10 and will soon be increased further.

These advantages aren’t attributable to superior skill or ability - they are consequences of the natural efficiencies of distributed, open systems, in which transparent, permissionless incentives reward participants for matching supply with demand.

Even so, Subnet 25 is in its infancy. These benefits are yet to be realized. That’s why we invite researchers, academics, and experts of all kinds to test it for themselves - and contribute not just to state-of-the-art technology, but to its distribution, too.

Dr. Steffen Cruz - Co-founder & CTO, Macrocosmos.